Speech synthesis in Japan has made impressive progress over the past year or two. Behind this is deep learning applied to the analysis and synthesis of human voices, which has advanced to the point where it is no longer possible to tell whether a human or a machine is speaking. In fact, there have been many cases where a voice assumed to belong to a person turned out to have been synthesized by a computer. In this article I will sort out the background and introduce what is actually happening now.

Speech synthesis

Speech synthesis is evolving rapidly thanks to deep learning and is a cutting-edge technology, but its history is quite long. The first speech synthesizer is said to be a mechanical speaking machine created by the Hungarian inventor Wolfgang von Kempelen in 1791, which could combine consonants and vowels to produce human-like voices.

Computer-based speech synthesis, however, did not appear until the 1960s. At Bell Labs, the American telecommunications research laboratory, an IBM 7094 was used to synthesize speech and sing the song “Daisy Bell”. Recordings of this singing voice are available on YouTube and other sites, so if you are interested, search for one and listen for yourself.

It is well known that when Yamaha Corporation began researching and developing singing voice synthesis in 2000, it named the effort the Daisy Project after “Daisy Bell”, and that this work led to VOCALOID.

I won’t go into the full history of speech synthesis here, but I believe VOICEROID, released by AHS Corporation in 2009, was the first product to bring speech synthesis into widespread use on ordinary PCs.

There was speech software before it, but VOICEROID attracted a lot of interest because it put a character front and center and let that character speak in a fairly clean, attractive voice.

VOICEROID used the AITalk engine developed by A.I. Corporation, and it became a huge hit because it could read ordinary Japanese text, written in a mix of kanji and kana, aloud fluently.

Incidentally, A.I. went public in 2018 as a software maker specializing in speech synthesis research and development. Before AITalk, AquesTalk, developed by Aquest, was released to the public in 2006 and went on to power later products such as Bouyomi-chan.

This kind of software is called concatenative (waveform-concatenation) or corpus-based speech synthesis. It records a human voice, splits the recordings into units such as consonants and vowels, stores them in a corpus (a database of the recorded, labeled speech), and then selects and joins those units to produce speech.

In a sense, it plays back digitally recorded voice, which is why the characteristics of the original speaker are reproduced so realistically. In addition, by recording the voice with different emotional expressions, such as happy, sad, or angry, you can build a separate database for each emotion and thus synthesize speech with emotional expression.
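To make the unit-joining idea concrete, here is a minimal Python sketch of concatenative synthesis. Everything in it is a hypothetical toy: the `UnitDB` layout (emotion, then unit label, then samples), the helpers `crossfade_concat` and `synthesize`, and the short sample lists standing in for real recordings. Actual products choose among many candidate units and smooth the joins far more carefully; this only shows lookup in a per-emotion database plus a simple linear crossfade at each seam.

```python
from typing import Dict, List

# Hypothetical layout: emotion -> unit label (consonant/vowel) -> recorded samples.
UnitDB = Dict[str, Dict[str, List[float]]]

# Toy stand-in for a recorded corpus; real units are thousands of samples long.
DEMO_DB: UnitDB = {
    "normal": {"k": [0.0, 0.5, 0.5, 0.0], "a": [1.0, 1.0, 1.0, 1.0]},
    "happy": {"k": [0.0, 0.8, 0.8, 0.0], "a": [1.5, 1.5, 1.5, 1.5]},
}


def crossfade_concat(a: List[float], b: List[float], overlap: int) -> List[float]:
    """Join two waveforms, linearly blending `overlap` samples at the seam."""
    overlap = min(overlap, len(a), len(b))
    if overlap == 0:
        return a + b
    out = a[: len(a) - overlap]
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)  # fade-in weight for b rises toward 1
        out.append(a[len(a) - overlap + i] * (1 - w) + b[i] * w)
    out.extend(b[overlap:])
    return out


def synthesize(labels: List[str], emotion: str, db: UnitDB, overlap: int = 2) -> List[float]:
    """Pick each unit from the chosen emotion's database and join them in order."""
    units = db[emotion]  # a separate database per emotion
    out: List[float] = []
    for label in labels:
        out = crossfade_concat(out, units[label], overlap)
    return out


if __name__ == "__main__":
    # "ka" from the normal database, then the same units from the happy database.
    print(synthesize(["k", "a"], "normal", DEMO_DB))
    print(synthesize(["k", "a"], "happy", DEMO_DB))
```

Changing `emotion` picks the same unit labels from a different set of recordings, which is how a per-emotion database yields emotional speech in this toy model.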

The drawback is that the installation becomes large, because each voice (and each emotion) needs a huge number of recordings as data. Still, a single voice including its emotional variations requires only a few GB, so this may not be a major concern these days.

By contrast, AI speech synthesis, that is, DNN (deep neural network) parametric speech synthesis, has developed rapidly over the past year or two.

Like the approach above, it starts by recording a person’s speech and labeling it, that is, marking where each consonant and vowel begins and ends. The computer then uses deep learning to learn how that person speaks, including how they pronounce words, and afterwards synthesizes new speech from what it has learned.
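The labeling step can be pictured with a short Python sketch. The label format here (one `start end phoneme` line per segment, times in seconds) and the helper names `parse_labels` and `cut_segments` are assumptions for illustration, not the format of any particular tool. The point is that once each consonant and vowel has a time span, the recording can be sliced into aligned examples for training.

```python
from typing import List, Tuple

Label = Tuple[float, float, str]  # (start seconds, end seconds, phoneme)


def parse_labels(text: str) -> List[Label]:
    """Parse hypothetical 'start end phoneme' lines (times in seconds)."""
    labels: List[Label] = []
    for line in text.strip().splitlines():
        start, end, phoneme = line.split()
        labels.append((float(start), float(end), phoneme))
    return labels


def cut_segments(samples: List[float], sr: int, labels: List[Label]) -> List[Tuple[str, List[float]]]:
    """Slice a recording into per-phoneme segments using the label timings."""
    return [(ph, samples[round(s * sr): round(e * sr)]) for s, e, ph in labels]


if __name__ == "__main__":
    # A toy 2-second "recording" at 8 samples per second, labeled as /k/, /a/, silence.
    recording = [float(i) for i in range(16)]
    labels = parse_labels("0.0 0.5 k\n0.5 1.25 a\n1.25 2.0 sil")
    for phoneme, segment in cut_segments(recording, 8, labels):
        print(phoneme, len(segment))
```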

The sound itself is produced by a vocoder, a system that simulates the throat and mouth, so speech can be synthesized as long as the parameters are available.
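For intuition about what simulating the throat and mouth means, the sketch below implements the classic source-filter model in plain Python: a pulse train stands in for the vocal folds, and a cascade of second-order digital resonators (Klatt-style formant filters) stands in for the vocal tract. This is a deliberately simple toy, not the vocoder used by any product mentioned here; modern DNN systems generally use neural vocoders instead. The function names and the formant values in the example are hypothetical and only approximate.

```python
import math
from typing import List, Sequence, Tuple


def pulse_train(f0: float, sr: int, n: int) -> List[float]:
    """Source: one impulse per pitch period, a crude stand-in for the vocal folds."""
    period = sr / f0
    out = [0.0] * n
    t = 0.0
    while t < n:
        out[int(t)] = 1.0
        t += period
    return out


def resonator(x: Sequence[float], freq: float, bw: float, sr: int) -> List[float]:
    """Second-order digital resonator modelling one formant of the vocal tract."""
    T = 1.0 / sr
    c = -math.exp(-2 * math.pi * bw * T)
    b = 2 * math.exp(-math.pi * bw * T) * math.cos(2 * math.pi * freq * T)
    a = 1 - b - c  # normalizes the gain at 0 Hz to 1
    y: List[float] = []
    y1 = y2 = 0.0
    for sample in x:
        yn = a * sample + b * y1 + c * y2
        y.append(yn)
        y2, y1 = y1, yn
    return y


def vocode(f0: float, formants: Sequence[Tuple[float, float]],
           sr: int = 16000, n: int = 1600) -> List[float]:
    """Synthesize a vowel-like sound from parameters only: pitch plus (freq, bandwidth) formants."""
    signal = pulse_train(f0, sr, n)
    for freq, bw in formants:  # cascade of filters shaping the source
        signal = resonator(signal, freq, bw, sr)
    return signal


if __name__ == "__main__":
    # Rough, illustrative formants for an /a/-like vowel at 120 Hz pitch.
    wave = vocode(120.0, [(730.0, 90.0), (1090.0, 110.0)])
    print(len(wave), max(abs(v) for v in wave))
```

No recordings are stored here: a few numbers (pitch, formant frequencies, bandwidths) are enough to generate the waveform, which is the property the parametric approach relies on.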

Thanks to recent advances in deep learning, these parameters have become so accurate that the resulting speech can no longer be distinguished from a human voice.

The parameters take up far less space than recorded PCM data, so the installation can be as small as 1/100 the size of a waveform-concatenation system.
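The size gap can be estimated with simple arithmetic. In the Python sketch below, uncompressed PCM size is duration × sample rate × bytes per sample × channels, and a model's size is parameter count × bytes per parameter. The figures in the example (10 hours of 48 kHz, 16-bit mono recordings and a 10-million-parameter float32 model) are hypothetical, chosen only to show the order of magnitude, and are not measurements of any real product.

```python
def pcm_bytes(seconds: int, sample_rate: int, bits_per_sample: int, channels: int = 1) -> int:
    """Uncompressed PCM size: duration x sample rate x bytes per sample x channels."""
    return seconds * sample_rate * (bits_per_sample // 8) * channels


def model_bytes(num_params: int, bytes_per_param: int = 4) -> int:
    """Model weight size, assuming float32 (4 bytes) per parameter by default."""
    return num_params * bytes_per_param


if __name__ == "__main__":
    # Hypothetical figures for illustration only, not measurements of any product.
    corpus = pcm_bytes(10 * 3600, 48_000, 16)  # 10 hours, 48 kHz, 16-bit mono
    model = model_bytes(10_000_000)            # 10 million float32 parameters
    print(f"corpus {corpus / 1e9:.2f} GB, model {model / 1e6:.0f} MB, ratio {corpus / model:.0f}:1")
```

With these assumed numbers the recordings take about 3.46 GB and the model about 40 MB, a ratio of roughly 86:1, the same order of magnitude as the 1/100 figure.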

Free Software VOICEVOX

VOICEVOX is open-source freeware, so anyone can get it at no cost. When downloading it, you choose your OS (Windows, Mac, or Linux) and either the GPU/CPU version or the CPU-only version, so you can take advantage of a GPU if you have one.

VOICEVOX was developed and released by Mr. Hiroshiba, currently a Dwango employee, as a personal project. The contributor list includes many names besides his, so a good number of people appear to be involved in its open-source development.

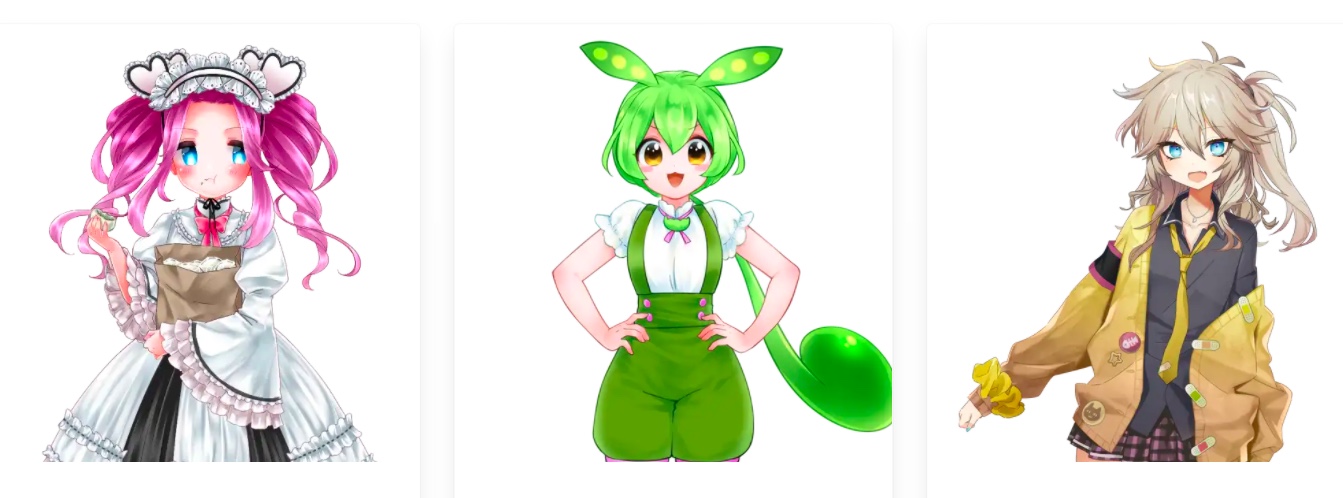

There are nine characters in total, and some characters offer multiple voice styles, such as normal, sweet, tsuntsun (standoffish), and sexy.

English is not supported, but English words can be read aloud with Japanese pronunciation.

As mentioned above, VOICEVOX is free software and can be used at no charge, including for commercial purposes. However, each voice library has its own terms of use, including rules about crediting it, so check them before using a voice.
